AI tools like ChatGPT, Claude, and Gemini are amazing – they can write emails, answer questions, and even help with coding. But there’s one big problem: AI can sometimes invent details, which is like guessing instead of knowing. This is called a hallucination.

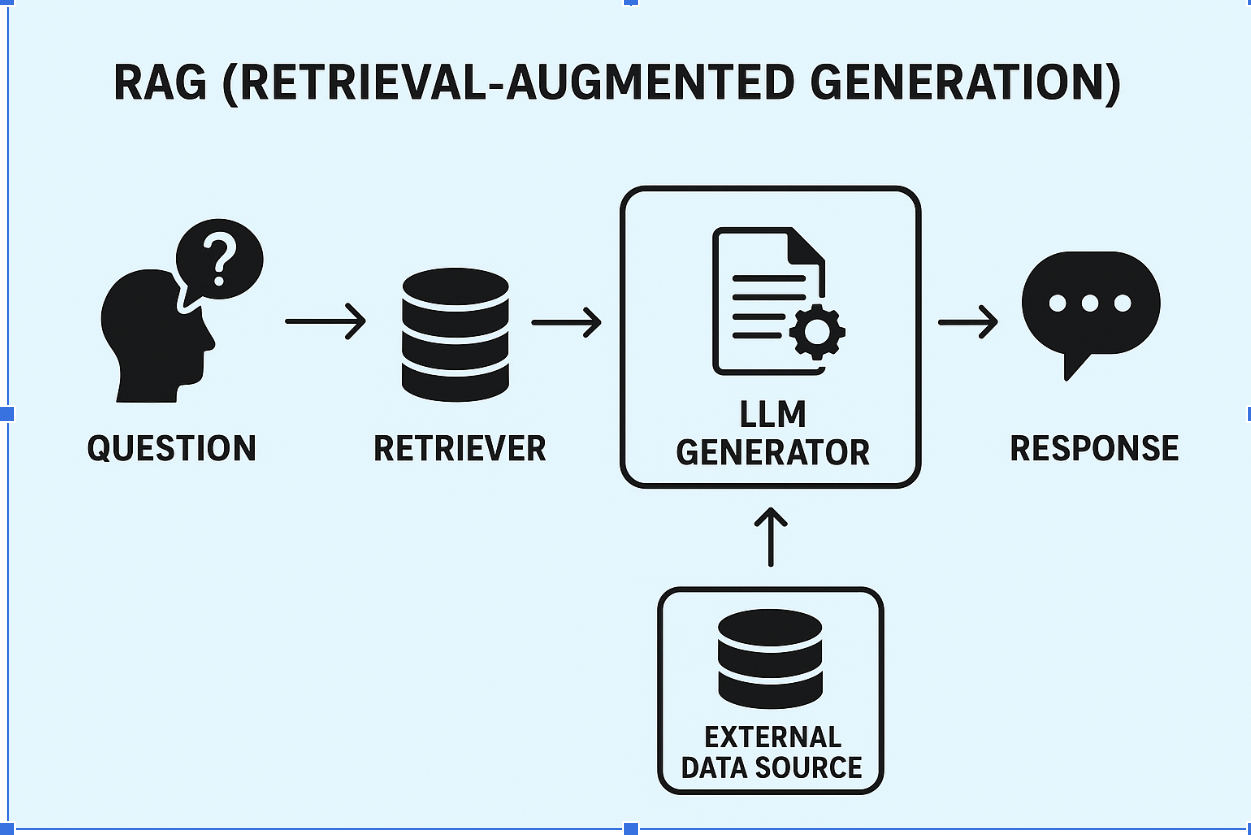

That’s why a new approach called RAG (Retrieval-Augmented Generation) is becoming popular. It helps AI give more accurate and reliable answers by connecting it to real data.

What is RAG?

RAG works in two simple steps:

- Find information – The AI looks into a knowledge source (like documents, websites, or databases) to find the right facts.

- Give an answer – The AI uses that information to create a proper response.

Think of it like an open-book exam. Instead of guessing from memory, the AI “opens the book” and finds the right answer.
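
The two steps can be sketched with a toy example. This is a minimal illustration rather than how production systems retrieve: it scores documents by word overlap instead of embeddings, and the `docs` array and the `words`, `retrieve`, and `buildPrompt` names are invented for the example.

```typescript
// Toy knowledge source (hypothetical content for illustration).
const docs: string[] = [
  "Employees can take 18 days of paid leave per calendar year.",
  "Sync runs automatically every 15 minutes.",
];

// Lowercase a string and split it into alphanumeric words.
function words(s: string): string[] {
  return s.toLowerCase().match(/[a-z0-9]+/g) ?? [];
}

// Step 1: find information. Pick the document sharing the most words with the question.
function retrieve(question: string, sources: string[]): string {
  const q = new Set(words(question));
  let best = sources[0];
  let bestScore = -1;
  for (const doc of sources) {
    const score = words(doc).filter(w => q.has(w)).length;
    if (score > bestScore) { best = doc; bestScore = score; }
  }
  return best;
}

// Step 2: give an answer. A real system would send this prompt to a language model.
function buildPrompt(question: string, source: string): string {
  return `Answer using only this source:\n${source}\n\nQuestion: ${question}`;
}

const sampleQuestion = "How many days of paid leave do employees get?";
console.log(buildPrompt(sampleQuestion, retrieve(sampleQuestion, docs)));
```

The leave-policy sentence wins because it shares five words with the question, while the sync sentence shares none.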

Why is RAG Important?

- ✅ More accurate answers – fewer made-up facts.

- ✅ Latest knowledge – pulls from up-to-date data instead of relying only on what the model learned during training.

- ✅ Trustworthy – can even show the source of the information.

- ✅ Easy for companies – no need to constantly retrain the AI model.

Where RAG is Used Today

- Healthcare – Doctors get answers based on recent medical studies.

- Legal – Lawyers can quickly find relevant case laws.

- Companies – Employees can ask questions about HR policies without reading long manuals.

- Customer Support – Chatbots give correct answers directly from product documents.

- HR Portal – HR admins can look up information in uploaded resumes with a single prompt.

The Challenges

RAG is powerful but not perfect. If the documents it retrieves are incorrect or unclear, the AI can still give an inaccurate answer. It also needs careful setup and ongoing maintenance: documents have to be chunked, indexed, and kept up to date.

Future of RAG

In the coming years, RAG will get even better:

- It will search not just text, but also images, videos, and audio.

- Companies will have clearer rules to measure accuracy.

- AI systems will combine RAG with other techniques for even smarter results.

👉 In simple words: If AI is the brain, RAG is the memory that makes sure it remembers the right things.

Tiny RAG App (Node + SQLite) — Step‑by‑Step

This walkthrough shows you:

- Project setup (TypeScript + openai + better-sqlite3)

- How to chunk docs, embed them, and store embeddings in SQLite

- A simple top-K cosine retriever

- A chat call that answers strictly from sources and cites them like [file#chunk]

- Example data, commands to run, and common tweaks

A minimal, end‑to‑end Retrieval‑Augmented Generation (RAG) example using TypeScript, OpenAI embeddings + chat, and SQLite (via better-sqlite3).

Goal: Ingest a small folder of .txt/.md files, embed & store chunks in SQLite, then answer questions grounded in those files with citations.

1) Prereqs

- Node 18+

- An OpenAI API key in env var OPENAI_API_KEY

mkdir tiny-rag && cd tiny-rag

npm init -y

npm i openai better-sqlite3 dotenv

npm i -D typescript ts-node @types/node @types/better-sqlite3

npx tsc --init --rootDir src --outDir dist --esModuleInterop --resolveJsonModule --module commonjs --target es2020

mkdir -p src data

Create .env in project root:

OPENAI_API_KEY=YOUR_KEY_HERE

EMBED_MODEL=text-embedding-3-small

CHAT_MODEL=gpt-4o-mini

Add scripts to package.json:

{
  "scripts": {
    "ingest": "ts-node src/ingest.ts",
    "ask": "ts-node src/ask.ts"
  }
}

2) Data: drop a couple of files in ./data

data/faq.txt

Product X supports offline mode. Sync runs automatically every 15 minutes or when the user taps “Sync Now”. Logs are saved in logs/sync.log.

data/policies.md

# Leave Policy (2024)

Employees can take 18 days of paid leave per calendar year. Unused leave does not carry over. For emergencies, contact HR at hr@example.com.

Feel free to replace with your own docs.

3) src/db.ts — tiny SQLite helper

import Database from 'better-sqlite3';

// Open (or create) rag.sqlite in the current working directory.
const db = new Database('rag.sqlite');

// documents holds one row per file; chunks holds the text windows and their embeddings.
db.exec(`
  PRAGMA journal_mode = WAL;

  CREATE TABLE IF NOT EXISTS documents (
    id INTEGER PRIMARY KEY,
    path TEXT UNIQUE,
    content TEXT
  );

  CREATE TABLE IF NOT EXISTS chunks (
    id INTEGER PRIMARY KEY,
    doc_id INTEGER NOT NULL,
    idx INTEGER NOT NULL,
    text TEXT NOT NULL,
    embedding BLOB NOT NULL,
    FOREIGN KEY(doc_id) REFERENCES documents(id)
  );

  CREATE INDEX IF NOT EXISTS idx_chunks_doc ON chunks(doc_id);
`);

export default db;

4) src/util.ts — chunking & cosine

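export type Chunk = { idx: number; text: string; start: number; end: number };

// Split text into fixed-size character windows; neighbouring windows share `overlap` characters.
export function chunkText(text: string, chunkSize = 800, overlap = 150): Chunk[] {
  if (overlap >= chunkSize) throw new Error('overlap must be smaller than chunkSize');
  const clean = text.replace(/\r/g, '');
  const chunks: Chunk[] = [];
  let i = 0, idx = 0;
  while (i < clean.length) {
    const end = Math.min(i + chunkSize, clean.length);
    chunks.push({ idx, text: clean.slice(i, end), start: i, end });
    idx++;
    // Stop once a window reaches the end of the text; stepping back by
    // `overlap` from the final position would otherwise loop forever.
    if (end === clean.length) break;
    i = end - overlap;
  }
  return chunks;
}

// Serialize a vector as raw Float32 bytes for the BLOB column.
export function toBlob(vec: number[] | Float32Array): Buffer {
  const f32 = vec instanceof Float32Array ? vec : Float32Array.from(vec);
  return Buffer.from(f32.buffer, f32.byteOffset, f32.byteLength);
}

// Read a BLOB back into a Float32Array. The bytes are copied first because a
// Buffer's byteOffset is not guaranteed to be a multiple of 4.
export function fromBlob(buf: Buffer): Float32Array {
  const bytes = Uint8Array.from(buf);
  return new Float32Array(bytes.buffer, 0, Math.floor(bytes.byteLength / 4));
}

// Cosine similarity; the small epsilon avoids division by zero for all-zero vectors.
export function cosineSim(a: Float32Array, b: Float32Array): number {
  let dot = 0, na = 0, nb = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    na += a[i] * a[i];
    nb += b[i] * b[i];
  }
  return dot / (Math.sqrt(na) * Math.sqrt(nb) + 1e-8);
}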
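
To see how the overlap plays out, the sketch below reproduces only the window arithmetic of chunking. `chunkRanges` is a hypothetical helper written for this illustration, and it assumes the loop stops as soon as a window reaches the end of the text. Each new window starts `chunkSize - overlap` characters after the previous one.

```typescript
// Compute [start, end) character windows for a text of the given length.
function chunkRanges(length: number, chunkSize = 800, overlap = 150): [number, number][] {
  if (overlap >= chunkSize) throw new Error("overlap must be smaller than chunkSize");
  const ranges: [number, number][] = [];
  let start = 0;
  while (start < length) {
    const end = Math.min(start + chunkSize, length);
    ranges.push([start, end]);
    if (end === length) break; // the last window reached the end of the text
    start = end - overlap;     // step back so neighbouring windows share `overlap` characters
  }
  return ranges;
}

// A 2000-character document with the defaults yields three windows:
// 0-800, 650-1450, and 1300-2000.
console.log(chunkRanges(2000));

// A document shorter than one chunk yields a single window: 0-100.
console.log(chunkRanges(100));
```

The overlap means a sentence that straddles a boundary still appears whole in at least one chunk, at the cost of embedding some text twice.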

5) src/openai.ts — client

import 'dotenv/config';
import OpenAI from 'openai';

export const openai = new OpenAI({ apiKey: process.env.OPENAI_API_KEY });
export const EMBED_MODEL = process.env.EMBED_MODEL || 'text-embedding-3-small';
export const CHAT_MODEL = process.env.CHAT_MODEL || 'gpt-4o-mini';

6) src/ingest.ts — read files → chunks → embeddings → SQLite

import fs from 'fs';
import path from 'path';
import db from './db';
import { openai, EMBED_MODEL } from './openai';
import { chunkText, toBlob } from './util';

const DATA_DIR = path.resolve('data');

// Embed a list of texts in a single API call.
async function embed(texts: string[]): Promise<number[][]> {
  const res = await openai.embeddings.create({
    model: EMBED_MODEL,
    input: texts
  });
  return res.data.map(d => d.embedding as number[]);
}

// Recursively yield every .txt and .md file under dir.
function* iterFiles(dir: string): Generator<string> {
  for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
    const p = path.join(dir, entry.name);
    if (entry.isDirectory()) yield* iterFiles(p);
    else if (p.endsWith('.txt') || p.endsWith('.md')) yield p;
  }
}

(async () => {
  if (!fs.existsSync(DATA_DIR)) throw new Error(`Missing data dir: ${DATA_DIR}`);

  const upsertDoc = db.prepare('INSERT INTO documents(path, content) VALUES (?, ?) ON CONFLICT(path) DO UPDATE SET content=excluded.content RETURNING id');
  const delChunks = db.prepare('DELETE FROM chunks WHERE doc_id = ?');
  const insertChunk = db.prepare('INSERT INTO chunks (doc_id, idx, text, embedding) VALUES (?, ?, ?, ?)');

  for (const file of iterFiles(DATA_DIR)) {
    const content = fs.readFileSync(file, 'utf8');
    const chunks = chunkText(content, 800, 150);
    if (chunks.length === 0) continue; // empty file: nothing to embed

    const embeddings = await embed(chunks.map(c => c.text));

    // Store a project-relative path so citations read like data/faq.txt#0.
    const relPath = path.relative(process.cwd(), file);

    // Replace the document row and its chunks in one transaction, so a failed
    // embedding call never leaves a document without chunks.
    const tx = db.transaction(() => {
      const { id: docId } = upsertDoc.get(relPath, content) as { id: number };
      delChunks.run(docId);
      for (let i = 0; i < chunks.length; i++) {
        insertChunk.run(docId, chunks[i].idx, chunks[i].text, toBlob(embeddings[i]));
      }
    });
    tx();
    console.log(`Ingested ${relPath} → ${chunks.length} chunks`);
  }
  console.log('Done.');
})().catch(err => { console.error(err); process.exit(1); });
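
The ingest script sends every chunk of a file in a single embeddings request, which is fine for small files. For larger documents you would likely want to split the inputs into batches. The sketch below shows only the batching logic. `inBatches`, `embedAll`, and the batch size of 3 are assumptions for illustration, and `fakeEmbed` is a stand-in for the real API call so the example runs offline.

```typescript
// Split an array into consecutive groups of at most `size` items.
function inBatches<T>(items: T[], size: number): T[][] {
  if (size < 1) throw new Error("size must be at least 1");
  const out: T[][] = [];
  for (let i = 0; i < items.length; i += size) out.push(items.slice(i, i + size));
  return out;
}

// Stand-in for the embeddings call (hypothetical): one tiny vector per input text.
async function fakeEmbed(texts: string[]): Promise<number[][]> {
  return texts.map(t => [t.length, 1]);
}

// Embed all texts batch by batch, preserving input order.
async function embedAll(texts: string[], batchSize: number): Promise<number[][]> {
  const vectors: number[][] = [];
  for (const batch of inBatches(texts, batchSize)) {
    const result = await fakeEmbed(batch);
    for (const v of result) vectors.push(v);
  }
  return vectors;
}

// Four texts with a batch size of 3 are sent as two requests and yield four vectors.
embedAll(["a", "bb", "ccc", "dddd"], 3).then(v => console.log(v.length));
```

Awaiting each batch in turn keeps the order of vectors aligned with the order of chunks, which the insert loop relies on.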

7) src/ask.ts — retrieve top‑K → answer with citations

import db from './db';
import { openai, CHAT_MODEL, EMBED_MODEL } from './openai';
import { cosineSim, fromBlob } from './util';

type Row = { id: number; idx: number; text: string; embedding: Buffer; path: string };

// Embed the question with the same model that was used for the chunks.
async function embedQuery(q: string): Promise<Float32Array> {
  const r = await openai.embeddings.create({ model: EMBED_MODEL, input: q });
  return Float32Array.from(r.data[0].embedding as number[]);
}

// Brute-force retrieval: score every stored chunk against the query and keep the best k.
function retrieveTopK(qVec: Float32Array, k = 5) {
  const rows = db.prepare(`
    SELECT chunks.id, chunks.idx, chunks.text, chunks.embedding, documents.path AS path
    FROM chunks JOIN documents ON chunks.doc_id = documents.id
  `).all() as Row[];

  const scored = rows.map(r => ({
    path: r.path,
    idx: r.idx,
    text: r.text,
    score: cosineSim(qVec, fromBlob(r.embedding))
  }));
  scored.sort((a, b) => b.score - a.score);
  return scored.slice(0, k);
}

// Label each chunk with its source so the model can cite it.
function buildContext(chunks: { path: string; idx: number; text: string }[]): string {
  return chunks.map(c => `SOURCE: [${c.path}#${c.idx}]\n${c.text}`).join('\n\n---\n');
}

async function answer(question: string) {
  const qVec = await embedQuery(question);
  const top = retrieveTopK(qVec, 5);
  const context = buildContext(top);

  const sys = `You are a precise assistant. Answer ONLY using the provided SOURCE context. If the answer is not in the sources, say you don't know. Cite sources inline like [filename#idx].`;

  const res = await openai.chat.completions.create({
    model: CHAT_MODEL,
    temperature: 0.1,
    messages: [
      { role: 'system', content: sys },
      { role: 'user', content: `SOURCES:\n\n${context}\n\nQUESTION: ${question}` }
    ]
  });

  const text = res.choices[0]?.message?.content?.trim() || '(no answer)';
  console.log('\n--- ANSWER ---\n');
  console.log(text);
  console.log('\n--- CITED CHUNKS ---');
  for (const c of top) console.log(`[${c.path}#${c.idx}] score=${c.score.toFixed(3)}`);
}

// Everything after the script name is treated as the question.
const q = process.argv.slice(2).join(' ').trim();
if (!q) {
  console.error('Usage: npm run ask -- "your question"');
  process.exit(1);
}

answer(q).catch(err => { console.error(err); process.exit(1); });
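
Because the model is asked to cite sources as [filename#idx], an answer can be checked after the fact. The sketch below is one possible approach and not part of the script above: `extractCitations` and `unknownCitations` are hypothetical helpers that pull citations out of an answer and flag any that do not match a retrieved chunk. The sample answer is invented.

```typescript
type Citation = { path: string; idx: number };

// Find every [path#idx] marker in the answer text.
function extractCitations(answer: string): Citation[] {
  const found: Citation[] = [];
  const re = /\[([^\[\]#]+)#(\d+)\]/g;
  let m: RegExpExecArray | null;
  while ((m = re.exec(answer)) !== null) {
    found.push({ path: m[1], idx: Number(m[2]) });
  }
  return found;
}

// Return citations that do not correspond to any chunk that was actually retrieved.
function unknownCitations(answer: string, retrieved: Citation[]): Citation[] {
  const known = retrieved.map(c => `${c.path}#${c.idx}`);
  return extractCitations(answer).filter(c => known.indexOf(`${c.path}#${c.idx}`) === -1);
}

const retrievedChunks: Citation[] = [{ path: "data/policies.md", idx: 0 }];
const sampleAnswer =
  "Employees get 18 days of paid leave [data/policies.md#0]. See also [data/faq.txt#3].";

// Only the data/faq.txt#3 citation is flagged, since that chunk was never retrieved.
console.log(unknownCitations(sampleAnswer, retrievedChunks));
```

A flagged citation does not prove the answer is wrong, but it is a cheap signal that the model cited something it was never shown.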

8) Run it

# 1) Embed & store your docs
npm run ingest

# 2) Ask questions grounded in your docs
npm run ask -- "How many paid leave days do employees get?"
# → Expect something like: "Employees get 18 days of paid leave per year [data/policies.md#0]"

npm run ask -- "Where are sync logs stored?"
# → "Logs are saved in logs/sync.log [data/faq.txt#0]"

9) What you just built (RAG loop)

- Retrieve: embed the user question → compute cosine similarity against stored chunk embeddings → select top‑K.

- Augment: stuff those top‑K chunks into the prompt as SOURCES.

- Generate: ask the chat model to answer strictly from SOURCES and cite them.
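
The retrieve step can be seen in isolation with hand-made vectors. This is a sketch under simplifying assumptions: the three-dimensional vectors and chunk labels are invented for illustration (real embeddings have hundreds or thousands of dimensions), and `cosine` and `topK` are hypothetical helper names.

```typescript
type Scored = { label: string; score: number };

// Cosine similarity: dot product divided by the product of the vector lengths.
function cosine(a: number[], b: number[]): number {
  let dot = 0, na = 0, nb = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    na += a[i] * a[i];
    nb += b[i] * b[i];
  }
  return dot / (Math.sqrt(na) * Math.sqrt(nb) + 1e-8);
}

// Score every stored vector against the query and keep the k best.
function topK(query: number[], stored: { label: string; vec: number[] }[], k: number): Scored[] {
  return stored
    .map(s => ({ label: s.label, score: cosine(query, s.vec) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k);
}

const toyStore = [
  { label: "policies.md#0", vec: [1, 0, 0] },
  { label: "faq.txt#0", vec: [0, 1, 0] },
  { label: "faq.txt#1", vec: [0.7, 0.7, 0] },
];

// The query points mostly along the first axis, so policies.md#0 ranks first
// and faq.txt#1 (which partly shares that direction) ranks second.
console.log(topK([0.9, 0.1, 0], toyStore, 2).map(s => s.label));
```

Cosine similarity compares direction rather than magnitude, which is why a long chunk and a short question can still score as close matches.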

10) Useful extensions (optional)

- Better retrieval: add metadata filters (per doc type), or hybrid search (BM25 + embeddings).

- Streaming: stream the model’s output (for example, with the Responses API) so users see text as it is generated.

- Longer contexts: dedupe/merge overlapping chunks, re‑rank with a small cross‑encoder.

- Vector DB: replace SQLite scan with pgvector/Chroma/Weaviate/Pinecone for scale.

- File types: parse PDFs (e.g., pdf-parse), HTML, CSVs.

- Eval: keep a small QA set; measure accuracy grounded in your docs.
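
For the evaluation idea, one lightweight approach is to keep a list of questions, each with a phrase the answer must contain, and report the fraction that pass. The sketch below assumes this substring check is good enough for a first pass. `QaCase`, `accuracy`, and the canned `stubAnswer` function are hypothetical; the stub stands in for a call to the real pipeline, which would be asynchronous.

```typescript
type QaCase = { question: string; mustContain: string };

// Fraction of cases whose answer contains the expected phrase (case-insensitive).
function accuracy(cases: QaCase[], answerFn: (q: string) => string): number {
  if (cases.length === 0) return 0;
  let passed = 0;
  for (const c of cases) {
    const answer = answerFn(c.question).toLowerCase();
    if (answer.indexOf(c.mustContain.toLowerCase()) !== -1) passed++;
  }
  return passed / cases.length;
}

// Canned answers standing in for the real RAG pipeline (hypothetical).
function stubAnswer(q: string): string {
  if (q.indexOf("leave") !== -1) return "Employees get 18 days of paid leave.";
  return "I don't know.";
}

const qaSet: QaCase[] = [
  { question: "How many paid leave days do employees get?", mustContain: "18 days" },
  { question: "Where are sync logs stored?", mustContain: "logs/sync.log" },
];

// The stub only knows the leave answer, so one of two cases passes: 0.5.
console.log(accuracy(qaSet, stubAnswer));
```

Re-running the same set after changing chunk size, k, or the system message shows whether a tweak helped or hurt.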

11) Troubleshooting

- If answers contain facts not in sources, lower the temperature and make the system message stricter.

- If citations look off, increase k and/or decrease chunkSize a bit (e.g., 600/120).

- Printing raw embedding BLOBs can garble terminal output, especially on Windows, so don’t console.log embeddings.
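
Another possible guard against answers that stray from the sources is to skip the chat call when retrieval finds nothing relevant. The sketch below is an illustration under stated assumptions: `filterByScore` is a hypothetical helper, the scores are invented, and the 0.3 cutoff is an arbitrary placeholder that would need tuning against your own data and embedding model.

```typescript
type ScoredChunk = { path: string; idx: number; score: number };

// Keep only chunks whose similarity meets the cutoff.
function filterByScore(chunks: ScoredChunk[], minScore: number): ScoredChunk[] {
  return chunks.filter(c => c.score >= minScore);
}

// Invented retrieval results for illustration.
const candidates: ScoredChunk[] = [
  { path: "data/policies.md", idx: 0, score: 0.62 },
  { path: "data/faq.txt", idx: 0, score: 0.12 },
];

const relevant = filterByScore(candidates, 0.3); // 0.3 is a placeholder cutoff
if (relevant.length === 0) {
  // Nothing relevant was retrieved, so answer without calling the chat model.
  console.log("I don't know.");
} else {
  // Only the chunks above the cutoff would be passed on as SOURCES.
  console.log(relevant.map(c => `[${c.path}#${c.idx}]`).join(" "));
}
```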

You now have a tiny but real RAG app. Swap the data/ folder with your HR/Support docs and go!

Conclusion

Retrieval-Augmented Generation (RAG) is emerging as one of the most effective ways to make AI more accurate, dependable, and up to date. Instead of relying only on what a model was trained on, RAG connects AI to real information sources, reducing errors and making responses easier to trust. Although it requires careful setup and quality data, the advantages—such as fewer hallucinations, current knowledge, and easier maintenance—make it a powerful approach for organizations of all sizes.

The Tiny RAG App shows how this can work in practice: documents are broken into chunks, turned into embeddings, stored in SQLite, and then retrieved to give fact-based, cited answers. Even with a small dataset, this workflow demonstrates how RAG delivers grounded, reliable results.

👉 Looking ahead, AI will succeed not by working in isolation but by combining reasoning with knowledge. RAG provides that bridge. And for teams that want to build such practical, scalable applications, Logical Wings—one of the top web app development companies—can help transform your ideas into scalable solutions.

Contact us on: +91 9665797912

Please email us: contact@logicalwings.com